Our ACL paper bridging formal semantics and computational linguistics can now be downloaded from arXiv. We present a new task and results for training models to learn semantically rich function words. We also analyze the role of linguistic context for both humans and models, with implications for cognitive plausibility and future modeling work.

Abstract:

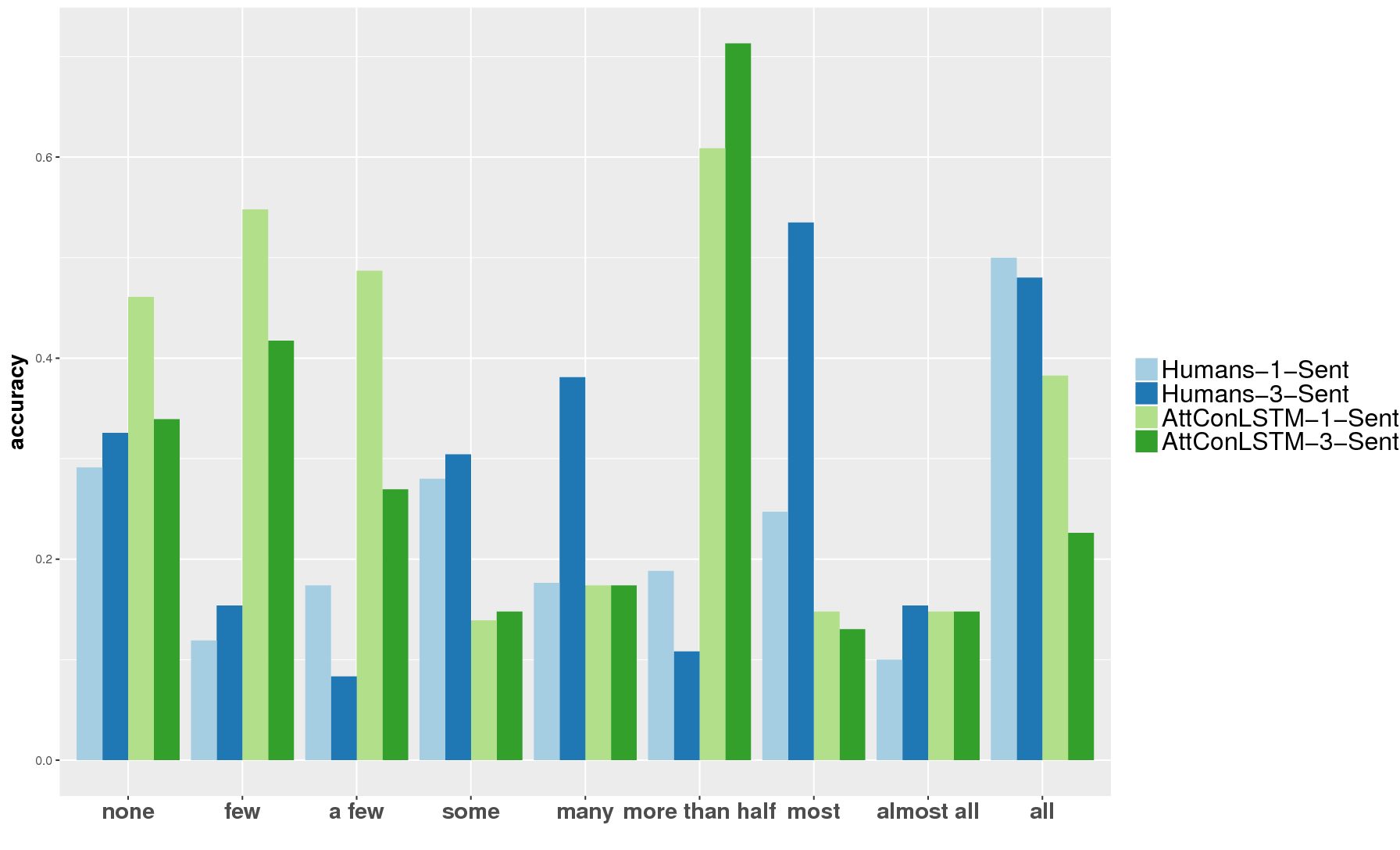

We study the role of linguistic context in predicting quantifiers (‘few’, ‘all’). We collect crowdsourced data from human participants and test various models in a local (single-sentence) and a global context (multi-sentence) condition. Models significantly out-perform humans in the former setting and are only slightly better in the latter. While human performance improves with more linguistic context (especially on proportional quantifiers), model performance suffers. Models are very effective in exploiting lexical and morpho-syntactic patterns; humans are better at genuinely understanding the meaning of the (global) context.