In a paper forthcoming in Semantics & Pragmatics, Shane and I propose that semantic universals of quantification can be explained in terms of learnability. We claim that many semantic universals arise simply because expressions satisfying them are easier to learn than those that do not. We support this explanation with a model of learning (back-propagation through a recurrent neural network), which shows that quantifiers attested in natural language are easier to learn than similar quantifiers that do not satisfy the universal constraints.

Two natural questions arise here:

- How does learnability exert pressure on language structure over time? In other words, how do the learnability results explain the linguistic facts?

- Is learnability the fundamental explanation, or could the universals be explained by a general notion of complexity, which also explains the learnability facts?

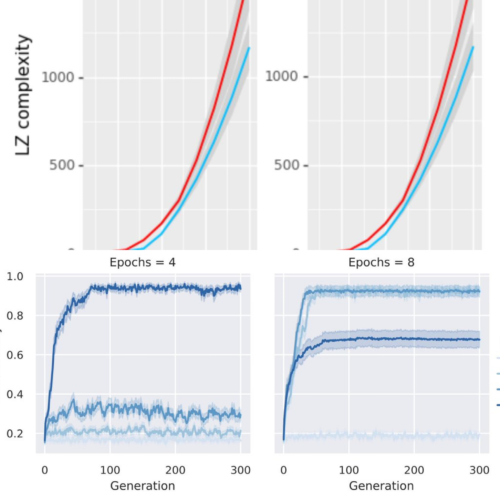

We give preliminary answers to these questions in two papers recently accepted to CogSci 2019. In joint work with Shane and Fausto, “The emergence of monotone quantifiers via iterated learning”, we show how iterated learning with neural agents explains why natural language quantifiers are monotone. In a joint paper with Iris and Shane, “Complexity and learnability in the explanation of semantic universals of quantifiers”, we show that approximate Kolmogorov complexity also nearly explains quantifier universals. Still, the question remains: are learnability and complexity two independent sources of semantic universals, or is one of the two the primary cause of cross-linguistic universals?
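
For readers unfamiliar with the term, a quantifier Q is upward monotone (in its second argument) if Q(A, B) and B ⊆ B′ together imply Q(A, B′). The following is a minimal sketch that checks this property by brute force over a small universe. It is only an illustration of the definition, not the neural learning setup used in the papers, and the function names (`is_upward_monotone`, `most`, `even_number_of`) are hypothetical names chosen for this example.

```python
from itertools import combinations


def subsets(universe):
    """Return all subsets of the universe as frozensets."""
    items = list(universe)
    return [
        frozenset(combo)
        for size in range(len(items) + 1)
        for combo in combinations(items, size)
    ]


def is_upward_monotone(quantifier, universe):
    """Brute-force check: Q(A, B) and B <= B2 must imply Q(A, B2)."""
    all_sets = subsets(universe)
    for a in all_sets:
        for b in all_sets:
            if not quantifier(a, b):
                continue
            for b2 in all_sets:
                # b <= b2 is the subset test for frozensets
                if b <= b2 and not quantifier(a, b2):
                    return False
    return True


def most(a, b):
    """'Most As are Bs': more As inside B than outside it."""
    return len(a & b) > len(a - b)


def even_number_of(a, b):
    """'An even number of As are Bs' (an illustrative non-monotone case)."""
    return len(a & b) % 2 == 0


universe = range(4)
print("most:", is_upward_monotone(most, universe))
print("even number of:", is_upward_monotone(even_number_of, universe))
```

On this small universe, `most` passes the check while `even_number_of` fails it: with A = {0}, the statement holds for B = ∅ but not for the larger set B′ = {0}.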